Email security

Aug. 26th, 2013 11:13 am

Mail encryption

If the privacy of your email matters to you, you may want to consider using end-to-end encryption. This is different from using a “secure” email provider: with end-to-end encryption, the sender encrypts the email before sending it, and the recipient decrypts it after receiving it. The message stays encrypted in transit and on the mail servers, so even your email provider cannot read it (though headers such as the subject line remain visible).

Without going into mathematics whose details I’d have to review anyway, suffice it to say that PGP is a strong system of email security that, with sensible (default!) settings, cannot be effectively broken with modern technology and modern mathematics.¹ (It is strong enough, in fact, that various US security agencies tried to suppress it, and when its creator, Phil Zimmermann, released it for free to the world, he became the subject of a criminal investigation for allegedly exporting munitions. The case was eventually dropped without charges.)

PGP uses something called asymmetric encryption. Technical details aside, the nifty and amazing thing about it is that it’s what’s known as a public-key cryptosystem: I can give you a key (my public key) that you can use to encrypt messages for me, but no one², not even you, can decrypt them except me, because I keep a matching private key that alone has the power to decrypt. My public key is here, should you wish to send me secure email.
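
To make the public/private split concrete, here is textbook RSA with absurdly small numbers (the classic p = 61, q = 53 example). It is a toy, not how PGP or GnuPG is implemented: real keys are thousands of bits long, use padding, and in practice the public key only encrypts a random session key for a fast symmetric cipher that encrypts the message itself. The function names are made up for illustration, and the code assumes Python 3.8 or later for `pow(e, -1, phi)`.

```python
# Textbook RSA with tiny numbers: a toy to show the public/private split.
# Not secure and not how PGP is implemented. Needs Python 3.8+ for pow(e, -1, phi).

def make_toy_keypair(p, q, e=17):
    """Return (public_key, private_key) built from two toy-sized primes p and q."""
    n = p * q                  # the modulus, shared by both keys
    phi = (p - 1) * (q - 1)    # easy to compute only if you know p and q
    d = pow(e, -1, phi)        # private exponent: the inverse of e modulo phi
    return (n, e), (n, d)

def encrypt(public_key, m):
    """Anyone holding the public key can do this."""
    n, e = public_key
    return pow(m, e, n)

def decrypt(private_key, c):
    """Only the holder of the private key can do this."""
    n, d = private_key
    return pow(c, d, n)

public, private = make_toy_keypair(61, 53)
message = 65                          # a "message" here is an integer smaller than n
ciphertext = encrypt(public, message)
print(public, ciphertext)             # (3233, 17) 2790
print(decrypt(private, ciphertext))   # 65
```

Anyone with (3233, 17) can encrypt, but decrypting needs d = 2753, and computing d requires knowing the factors of 3233. That is trivial at this size but, as far as anyone knows, infeasible for the key sizes PGP uses.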

My preferred solution is a plugin called Enigmail for my mail client of choice, Thunderbird.

PGP with Enigmail in Windows

- Install Thunderbird.

- Download and install Gpg4win, the Windows distribution of GnuPG (an OpenPGP implementation).

- Install Enigmail (the easiest way is to go to Tools→Add-ons in Thunderbird and search for Enigmail).

There are some other solutions, none of which I have used.

PGP for webmail (like Gmail) in Chrome

There’s a browser plugin called Mailvelope, currently for Chrome only (Firefox support is in the works), that will transparently encrypt and decrypt your webmail. There’s a helpful guide. In the meantime, there’s another plugin for Firefox, but it has received mixed reviews.

Linux

If you’re using Linux, this shouldn’t be a problem in the first place: install your mail client of choice, and it almost certainly either comes with OpenPGP support or has a plugin readily available.

Other

Apparently there’s an Outlook plugin, and there’s also one for OS X Mail.app.

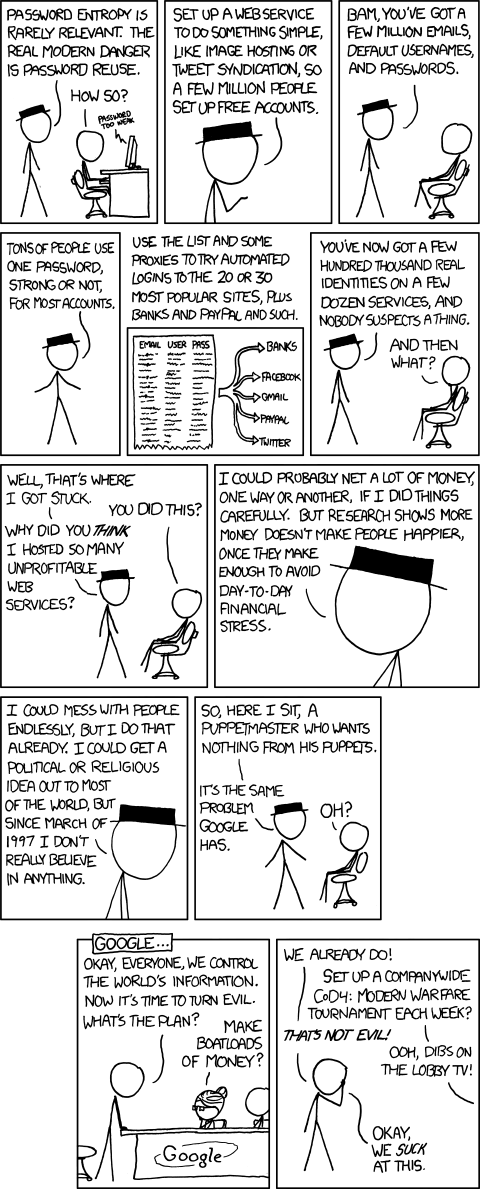

Passwords

As a reminder, your security is never stronger than your password, and your password is never safer than the least secure place it is stored. If your password is weak (password, your name, your date of birth…), there’s no helping you. If your password is pretty strong (|%G7j>uT^|:_Z5-F), that’s still no help at all if you also used it on LinkedIn when they were hacked and their password database was stolen, so that any malicious hacker can just download a database of email addresses paired with their passwords.
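
One rough way to put a number on “pretty strong”: if every character is picked uniformly at random, the password carries length × log2(alphabet size) bits of entropy, and a brute-force attacker needs on average half of 2^bits guesses. The sketch below assumes a hypothetical attacker making 10^12 guesses per second; the figure is an assumption, and the formula does not apply to human-chosen passwords like names or birth dates, which have far less entropy than their length suggests.

```python
import math
import string

# Letters, digits and punctuation: the 94 printable ASCII characters other than space.
PRINTABLE = string.ascii_letters + string.digits + string.punctuation

GUESS_RATE = 1e12  # hypothetical attacker speed in guesses per second (an assumption)

def entropy_bits(length: int, alphabet_size: int) -> float:
    """Entropy of a password whose characters are each picked uniformly at random."""
    return length * math.log2(alphabet_size)

def years_to_crack(bits: float, guesses_per_second: float = GUESS_RATE) -> float:
    """Average brute-force time, since on average half the space must be searched."""
    seconds = 2 ** (bits - 1) / guesses_per_second
    return seconds / (365.25 * 24 * 3600)

for label, length, size in [
    ("10 random lowercase letters", 10, 26),
    ("16 random printable characters", 16, len(PRINTABLE)),
]:
    bits = entropy_bits(length, size)
    print(f"{label}: {bits:.1f} bits, about {years_to_crack(bits):.2g} years")
```

The exact guess rate hardly matters: each extra random character multiplies the work by the alphabet size. None of this helps once the password itself leaks from a breached site, though.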

The solution is to use strong passwords and to use each password in only one place: if you steal my LinkedIn password, you can hack my LinkedIn account, but you can’t use that password to access my bank, email, blog, or any other account. The drawback of strong, single-use passwords is that you’ll never, ever remember them. The remedy is to use software to remember them for you.

My preferred solution is a family of programs: KeePass for Windows, KeePassX for Linux, KeePassDroid for Android (my smartphone), and so on. Their main feature is storing all my passwords in a very strongly encrypted file. I store this file in Dropbox, which lets me share it between my devices (work computer, home computer, laptop, phone). I don’t consider Dropbox fully trustworthy, but that’s OK: if anyone breaks into my Dropbox account, all they’ll get is a very strongly encrypted archive that, as long as my master password is itself strong, would take either major breakthroughs or billions of years to break into (or a side-channel attack like reading over my shoulder, literally or electronically; but if they can do that, my passwords don’t matter anyway). Thanks to this, I can use very strong passwords (like -?YhS\[q@V4#]F'/L|#*1z)_S".35/#T), unique to each website or other service. Meanwhile, I only have to remember one password: the password for KeePass itself (which I shouldn’t use for anything else).
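
Part of why a stolen vault file is useless without the master password is that the file’s encryption key is derived from that password by a deliberately slow function, so every guess costs the attacker time. Below is a minimal sketch of that idea using PBKDF2 from Python’s standard library. It is not KeePass’s actual file format, which has its own key-derivation and cipher settings; the iteration count, function name and example passwords are hypothetical, and the actual encryption step is omitted because the standard library has no general-purpose cipher.

```python
import hashlib
import hmac
import os

# Illustrative only: not KeePass's file format. PBKDF2 from the standard library
# stands in for whatever key-derivation function a real password manager uses.
ITERATIONS = 200_000  # hypothetical work factor: makes every password guess slow

def derive_vault_key(master_password: str, salt: bytes) -> bytes:
    """Stretch the master password into a 256-bit key for encrypting the vault file."""
    return hashlib.pbkdf2_hmac(
        "sha256", master_password.encode("utf-8"), salt, ITERATIONS, dklen=32
    )

# The random salt is stored next to the encrypted file; it need not be secret,
# but it stops an attacker from reusing precomputed guesses across files.
salt = os.urandom(16)
key = derive_vault_key("correct horse battery staple", salt)
retry = derive_vault_key("correct horse battery staple", salt)
wrong = derive_vault_key("correct horse battery stapler", salt)

print(len(key))                          # 32
print(hmac.compare_digest(key, retry))   # True: same password, same key
print(hmac.compare_digest(key, wrong))   # False: one character off, unrelated key
```

The slowdown multiplies an attacker’s cost per guess, but it cannot rescue a weak master password: a dictionary word still falls after a modest number of guesses.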

The KeePass family of software also tends to come with good password generators, which can produce garbled gobbledygook like the above and can apply constraints if a given website won’t let you use special characters (e.g. you can tell it to generate a random 10-character password of letters and digits only, containing at least one letter and one digit). You can also use it to store files, which is a nice way to keep track of things like PGP keys.
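
A constrained generator like the one in that example can be sketched in a few lines with Python’s `secrets` module, which is meant for security-sensitive randomness (unlike `random`). This is not KeePass’s own generator; `generate_password` is a hypothetical name, and the constraints simply mirror the letters-and-digits example.

```python
import secrets
import string

LETTERS_AND_DIGITS = string.ascii_letters + string.digits  # no special characters

def generate_password(length: int = 10) -> str:
    """Random password of letters and digits with at least one letter and one digit."""
    if length < 2:
        raise ValueError("length must be at least 2 to fit a letter and a digit")
    while True:
        candidate = "".join(secrets.choice(LETTERS_AND_DIGITS) for _ in range(length))
        # Rejection sampling: discard and retry until the constraints hold. This keeps
        # every qualifying password equally likely, unlike forcing a digit into a fixed slot.
        if any(c.isalpha() for c in candidate) and any(c.isdigit() for c in candidate):
            return candidate

print(generate_password())
print(generate_password(32))
```

Retrying is cheap here: a random 10-character string from this alphabet almost always contains both a letter and a digit, so the loop rarely runs more than once.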

¹ It may be broken in the future if usefully sized quantum computers are ever built, or if a major mathematical breakthrough is made in prime factorisation; mathematicians have failed to make this breakthrough for some centuries now. If this happens, I still wouldn’t worry about my personal email: the communications of major world governments, militaries, and corporations will become just as vulnerable as mine, and will be a lot more interesting.

² That is to say, there are no direct attacks known to be effective against the ciphers used. There are side-channel attacks, which is a fancy way of saying that someone could get around your encryption without defeating the mechanism itself. For example, they could read over your shoulder, install malware on your computer that records your keystrokes as you type your passwords, or beat you with a $5 wrench until you talk.